Architecture That Thinks Back

The teams I talk to aren’t asking “should we use AI?” anymore. They’re asking “how do we trust what it builds?” That’s the right question. When AI writes code that ships to production, the architecture conversation changes. It’s no longer only about boundaries - services, APIs, ownership. It’s also about cognition: how much does this component understand about the system it’s changing?

Developers are shifting from being the only authors to being orchestrators. That isn’t losing agency - it’s gaining a different kind. But it calls for a new approach to design, one where context isn’t just documentation; it’s architecture.

Feedback Loops Are Everything

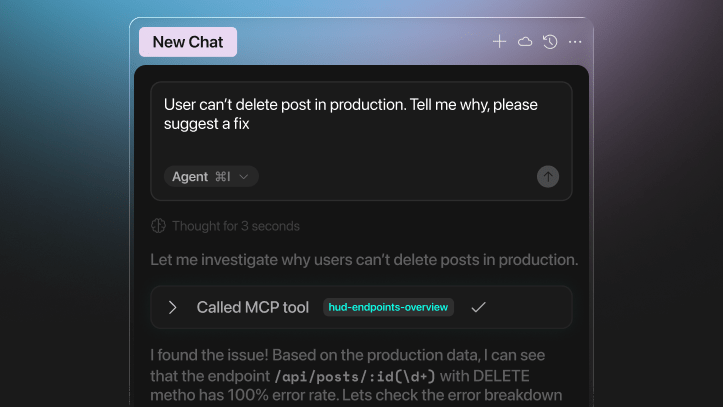

Here’s what we’ve seen work: teams that connect code generation directly to runtime signals - performance data, dependency graphs, business impact metrics - learn much faster than teams that don’t.

Without that feedback, AI is just a blind accelerator. It’ll write code fast, sure. But will it write good code? Will it understand what breaks when you change that one function three layers deep? A new developer - human or agent - can contribute meaningful code on day one now. That’s wild. But what really matters is what happens after merge. The teams that build tight feedback loops between generation and validation - those are the ones moving fast and staying stable.
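To make “tight feedback loop” a little more concrete, here is a minimal sketch of a post-merge check that compares runtime signals before and after a change. Everything in it is an assumption for illustration: the `RuntimeSignals` fields, the `evaluate_change` function, and the thresholds are hypothetical, and a real setup would pull these numbers from whatever observability stack the team already runs.

```python
from dataclasses import dataclass


@dataclass
class RuntimeSignals:
    """Hypothetical snapshot of one service's signals over a fixed window."""
    p95_latency_ms: float
    error_rate: float  # fraction of failed requests, 0.0 to 1.0


def evaluate_change(
    baseline: RuntimeSignals,
    after: RuntimeSignals,
    max_latency_increase: float = 0.10,      # assumed: tolerate +10% p95 latency
    max_error_rate_increase: float = 0.005,  # assumed: tolerate +0.5 points of errors
) -> list[str]:
    """Return a list of findings; an empty list means the change looks healthy."""
    findings = []

    latency_limit = baseline.p95_latency_ms * (1 + max_latency_increase)
    if after.p95_latency_ms > latency_limit:
        findings.append(
            f"p95 latency rose from {baseline.p95_latency_ms:.0f} ms "
            f"to {after.p95_latency_ms:.0f} ms"
        )

    if after.error_rate - baseline.error_rate > max_error_rate_increase:
        findings.append(
            f"error rate rose from {baseline.error_rate:.3f} "
            f"to {after.error_rate:.3f}"
        )

    return findings


if __name__ == "__main__":
    before = RuntimeSignals(p95_latency_ms=200.0, error_rate=0.010)
    after = RuntimeSignals(p95_latency_ms=260.0, error_rate=0.011)
    for finding in evaluate_change(before, after):
        print("needs review:", finding)
```

The point isn’t the specific thresholds. It’s that the findings can flow back to whoever wrote the change - human or agent - as input for the next iteration.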

The Metrics We Thought We Wanted

Every engineering leader loves a chart that goes up and to the right. PRs. Commits. Lines of code. AI makes all those numbers spike immediately. And they tell you absolutely nothing.

The real signals live downstream:

- How stable are we after release?

- What’s our regression frequency?

- How fast do we recover when things break?

AI shifts the bottleneck: the hard part is no longer writing code - it’s validating it. The best teams I’ve seen have rebuilt their metrics around reliability, not vanity speed. They measure how quickly they recover, not how quickly they ship.
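As a rough illustration of what measuring those downstream signals could look like, the sketch below computes regression frequency and mean time to recover from a list of release records. The `Release` record and its fields are hypothetical; in practice this data would come from a deploy log and an incident tracker, and the exact definitions vary from team to team.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Release:
    """Hypothetical release record; field names are assumptions for this sketch."""
    deployed_at: datetime
    caused_regression: bool = False
    failed_at: Optional[datetime] = None    # when the breakage was detected
    restored_at: Optional[datetime] = None  # when service was back to normal


def regression_frequency(releases: list[Release]) -> float:
    """Fraction of releases that introduced a regression."""
    if not releases:
        return 0.0
    return sum(r.caused_regression for r in releases) / len(releases)


def mean_time_to_recover(releases: list[Release]) -> Optional[timedelta]:
    """Average time from detected failure to restoration, or None if no incidents."""
    durations = [
        r.restored_at - r.failed_at
        for r in releases
        if r.failed_at is not None and r.restored_at is not None
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


if __name__ == "__main__":
    day = datetime(2025, 1, 6)
    history = [
        Release(deployed_at=day.replace(hour=9)),
        Release(
            deployed_at=day.replace(hour=10),
            caused_regression=True,
            failed_at=day.replace(hour=10, minute=15),
            restored_at=day.replace(hour=10, minute=45),
        ),
    ]
    print("regression frequency:", regression_frequency(history))
    print("mean time to recover:", mean_time_to_recover(history))
```

Neither number moves just because more code was generated, which is what makes them harder to game than PR or commit counts.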

Organizations That Learn

This came up again and again on the “AI in the Trenches” panel: the hardest part isn’t teaching machines to code - it’s teaching organizations to learn. AI adoption doesn’t fail because the models aren’t good enough. It fails because the process isn’t aligned. When every engineer is experimenting in isolation, you get chaos.

The turning point happens when AI stops being a personal productivity hack and becomes a shared capability - with validation, accountability, and yes, guardrails. Guardrails get a bad rap. People think they slow you down. But guardrails are what let you move fast without breaking production. They’re what turn experimentation into engineering.

What’s Actually Missing

Models are already powerful enough for most of what we need. What’s missing is context. AI still struggles to reason about live systems - how they perform under load, how they degrade, how they recover. Until agents can see the world their code runs in - not just the codebase but its live behavior - they’re still guessing.

That’s the next frontier: making AI production-aware. Connecting it directly to the signals that describe how systems actually behave in reality. That’s when we move from “AI that codes” to “AI that understands.”

The Shift Is Already Here

Panels like “AI in the Trenches” remind me that this transformation isn’t coming - it’s already happening. Quietly, inside teams, inside release cycles, inside every code review where someone has to decide whether to trust the generated code. The next wave of progress won’t come from faster generation. It’ll come from systems - human and machine - that can learn from how their code lives in the world.

If you’re curious about the full conversation and want to dive deeper into real-world AI adoption patterns, check out the InfoQ eMag: AI-Assisted Development - Real-World Patterns, Pitfalls, and Production Readiness.